Ethical and Responsible AI, in the post-ChatGPT era, is no longer a futuristic promise. In an enterprise setup, it has to be deeply embedded in our daily workflows, from enterprise automation to data governance and compliance. With the great power of Generative AI, comes even greater scrutiny. As organizations rush to adopt Generative AI, Large Language Models (LLMs), RAGs, and Autonomous Agents, they face an urgent need to align AI usage with core values: fairness, transparency, privacy, and accountability.

Table of Contents

Introduction: Why Ethics Must Lead the AI Revolution

We’ve crossed the inflection point. AI is no longer experimental—it’s exponential. As enterprises embed Large Language Models (LLMs), GenAI copilots, and autonomous agents into daily workflows, one question looms louder than any prompt: Are we doing this responsibly?

The challenge is no longer about building AI. It’s about building AI you can trust.

At CAIOz.com, we present “The Ten Commandments of Ethical and Responsible AI”—a 2025-ready enterprise playbook grounded in trust, accountability, and real-world execution. These aren’t just guidelines. They’re your governance backbone.

1. ⚖️ Fairness and Non-Discrimination

In the enterprise context, fairness is not just a moral obligation—it’s a strategic imperative. Organizations increasingly use AI to automate hiring, credit scoring, insurance underwriting, customer segmentation, and more. If left unchecked, these systems can inadvertently replicate historical inequalities encoded in data. The reputational, legal, and financial risks of biased AI are enormous. Discriminatory systems can violate regulations like the Equal Credit Opportunity Act (ECOA) or EEOC guidelines, and they can erode consumer trust permanently. Embedding fairness ensures that enterprises foster inclusive growth, mitigate risk exposure, and build AI systems that reflect ethical and societal expectations. In sectors like banking, healthcare, and government services, bias in AI can cost lives, not just dollars—making fairness foundational to AI governance.

How to implement

- ✅ Conduct algorithmic bias audits regularly.

- ✅ Apply techniques like adversarial debiasing, reweighing, or fair re-sampling.

- ✅ Eliminate proxy variables (like ZIP codes for race).

- ✅ Benchmark models on sensitive categories: gender, race, disability, age.

Toolkits

2. 🔍 Transparency and Explainability

For enterprises deploying AI at scale, transparency and explainability are non-negotiable enablers of accountability, regulatory compliance, and user adoption. Business decisions driven by AI—whether in fraud detection, customer recommendations, or loan approvals—must be explainable to internal stakeholders and regulators, auditors, and customers. Without transparency, AI becomes a black box, eroding user trust and impeding debugging, iteration, or redressal. In highly regulated sectors like finance or healthcare, explainability is a legal requirement under mandates like GDPR’s “right to explanation.” Transparent AI also empowers non-technical teams—like legal, risk, compliance, and customer support—to participate in governance, ensuring AI doesn’t operate in a vacuum. Simply put, unexplainable AI is unaccountable AI, and that’s a risk no enterprise can afford.

How to implement

- ✅ Use Model Cards and Datasheets for Datasets for documentation.

- ✅ Integrate LIME or SHAP for explainability in UI and logs.

- ✅ Maintain decision logs and explain uncertainty to end-users.

Checklist

Does your end-user understand how the AI arrived at the result?

3. 👤 Accountability and Human Oversight

AI without human oversight is automation without responsibility. In an enterprise, every algorithmic decision carries a business impact—affecting customers, employees, compliance obligations, and shareholder value. Accountability ensures that someone—not something—remains answerable when outcomes go awry. As enterprises scale autonomous workflows in customer service, procurement, HR, and legal, maintaining a “human-in-the-loop” is vital to flag anomalies, handle exceptions, and uphold ethical checks. Establishing clear ownership for every AI system fosters clarity in responsibilities, escalation paths, and performance metrics. In an era of deepfake threats, hallucinated GenAI responses, and model drift, human accountability is the guardrail that prevents intelligent systems from becoming irresponsible actors. It’s not about controlling AI—it’s about staying in control of the enterprise.

How to implement

- ✅ Assign a human custodian for every AI system.

- ✅ Embed Human-in-the-Loop (HITL) in critical workflows (e.g., hiring, loan approvals).

- ✅ Keep escalation workflows for AI overrides.

- ✅ Ensure traceable logs for audit and appeal.

4. 🔐 Privacy and Data Stewardship

Data is the new oil—but without proper stewardship, it’s also a liability. Enterprises rely on massive datasets to fuel AI, often including personal, behavioral, financial, and biometric information. Mishandling this data can lead to regulatory fines, consumer backlash, and irreversible reputational harm. In the age of GDPR, HIPAA, and India’s DPDP Act, privacy is not just an ethical consideration—it’s a legal battlefield. Proper data governance protects organizations from compliance failures, supports secure data sharing across departments and partners, and preserves consumer trust. By minimizing data use, anonymizing sensitive elements, and aligning data usage with clearly defined purposes, enterprises can innovate responsibly.

AI must respect the sanctity of data—because privacy violations are not just legal risks, they are trust bankruptcies.

How to implement

- ✅ Apply data minimization, anonymization, and differential privacy.

- ✅ Ensure compliance with GDPR, HIPAA, DPDP Act (India).

- ✅ Audit data pipelines for leaks and re-identification risks.

Frameworks

5. 🛡️ Robustness and Security

As enterprises operationalize AI systems, robustness becomes critical to avoid catastrophic failures, adversarial attacks, or unintended behaviors. A faulty algorithm can crash a stock price, deny healthcare benefits, or misroute millions of dollars. Moreover, AI systems are uniquely vulnerable to adversarial inputs, data poisoning, and model drift—making traditional software QA practices insufficient. Robust AI systems must withstand real-world volatility, evolving data landscapes, and malicious manipulation. Security is equally vital: exposed models and endpoints are targets for IP theft, model inversion attacks, or prompt injection. Enterprises must prioritize resilience not just to preserve uptime, but to ensure safety, reliability, and compliance.

In mission-critical environments, robustness is not a feature—it’s a prerequisite for survival.

How to implement

- ✅ Test for adversarial attacks, model drift, and data poisoning.

- ✅ Set up anomaly detection and runtime guardrails.

- ✅ Use model versioning and integrity checks.

Tools

6. 🌍 Inclusivity and Accessibility

Modern enterprises serve global, diverse audiences. AI that’s not inclusive is AI that fails by design. Whether you’re building a virtual assistant, e-learning platform, healthcare chatbot, or banking portal, your AI must work across languages, geographies, disabilities, and age groups. Failing to ensure accessibility limits your market reach, marginalizes vulnerable populations, and may even violate laws like the Americans with Disabilities Act (ADA). Inclusivity also extends to datasets—models trained only on Western English-speaking corpora will underperform in regional or minority contexts. By proactively designing for inclusivity, enterprises unlock new customer segments, enhance brand equity, and uphold their DEI (Diversity, Equity, Inclusion) mandates.

Accessible AI is usable AI—and usability is the currency of trust and adoption.

How to implement

- ✅ Localize models to regional dialects and scripts.

- ✅ Ensure compatibility with screen readers, alt-text, and assistive tech.

- ✅ Design inclusive UX with diverse personas in mind.

Checklist

Is your AI usable by a rural farmer, a senior citizen, or someone with a disability?

7. 🍃 Environmental Responsibility

Training large AI models can emit tons of carbon dioxide, rivaling that of international flights. As enterprises race to deploy GenAI, LLMs, and multimodal systems, sustainability becomes a core responsibility. Regulators, customers, and investors are increasingly scrutinizing the environmental impact of AI. Greenwashing will no longer pass; real data, such as carbon footprint disclosures and energy consumption metrics, are expected. Using model distillation, quantization, transfer learning, and cloud-native green infrastructure can significantly reduce this footprint. Moreover, optimizing compute usage and deprecating idle models avoids waste. For ESG-compliant enterprises and those pursuing net-zero targets, responsible AI deployment is a cornerstone of sustainable innovation.

If AI is the brain of the enterprise, the environment is its lifeblood—don’t compromise either.

How to implement

- ✅ Track the carbon footprint of model training and inferencing.

- ✅ Use distilled, quantized, or low-rank models.

- ✅ Decommission unused models and choose green cloud options.

Best Practices: Energy-efficient training using Hugging Face Optimum, Azure Green Compute.

8. 🤝 Purpose Alignment and Social Benefit

Not all AI is good AI. Just because something can be built doesn’t mean it should. Purpose alignment ensures that every AI initiative serves a constructive, ethical, and socially beneficial objective. Enterprises must resist the temptation to deploy AI for surveillance, misinformation, deepfake generation, or psychological manipulation. AI that undermines societal well-being—even if profitable—will attract backlash, bans, and disillusionment. Aligning AI with human values, public benefit, and enterprise purpose helps build long-term trust with customers, regulators, and civil society. Mapping projects to the UN SDGs or ESG goals can also signal leadership in ethical innovation.

Purpose-driven AI is a brand differentiator—one that outlasts short-term commercial gain.

How to implement

- ✅ Use a purpose alignment checklist before every project.

- ✅ Prohibit surveillance, deepfake creation, misinformation, and psychological manipulation.

- ✅ Align with UN SDGs, ESG goals, and social impact metrics.

Audit Tip: Ask “Would I be okay if this system impacted me or my loved ones?”

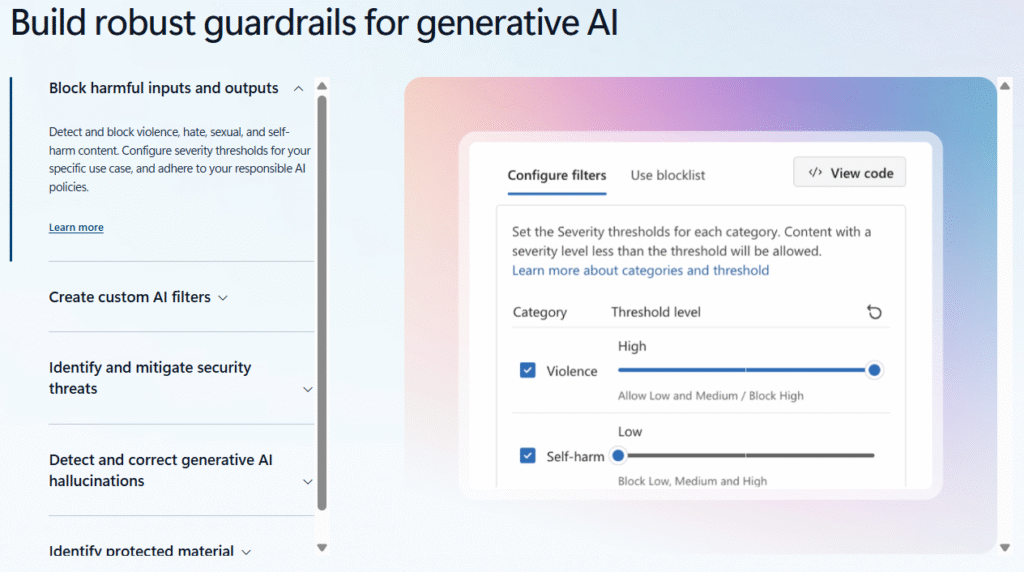

9. 🛡️ Security and Misuse Prevention

AI systems are dual-use technologies—tools that can help or harm depending on who uses them and how. That’s why misuse prevention is a critical control surface for AI governance. A generative model trained to summarize research can also generate phishing emails, fake news, or offensive content. Without proper access controls, prompt filtering, and abuse monitoring, even well-intentioned AI tools can become liabilities. Enterprises must invest in red teaming, input validation, prompt injection mitigation, and strict identity/authentication protocols to preempt misuse. Defining “acceptable use” and proactively blocking high-risk queries is essential. Misused AI isn’t just a technical failure—it’s a corporate scandal waiting to happen.

How to implement

- ✅ Use role-based access control (RBAC), JWT, and IAM policies.

- ✅ Employ prompt filtering, toxicity classifiers, and input sanitization.

- ✅ Red-team every AI system before deployment.

Toolkit

10. 🔁 Continuous Governance and Lifecycle Monitoring

AI development doesn’t stop at deployment—that’s where governance begins. Unlike traditional software, AI systems evolve post-release due to data drift, model degradation, and environmental dynamics. Continuous governance ensures that models remain compliant, performant, and ethical across their lifecycle. Enterprises must embed Responsible AI checkpoints into each phase—from data curation to model training, deployment, retraining, and sunset. Regular performance monitoring, bias audits, ethical reassessments, and incident documentation are critical. A robust governance model—backed by an AI Ethics Council, automated dashboards, and compliance protocols—ensures that AI remains a force for good as it scales.

In the enterprise, responsible AI isn’t a finish line—it’s a daily practice.

How to implement

- ✅ Form an AI Ethics Council or a Responsible AI Review Board.

- ✅ Introduce RAI checkpoints at every ML lifecycle stage:

- Data sourcing

- Model training

- Deployment

- Post-deployment feedback loop

- ✅ Publish annual AI Impact & Compliance Reports.

📄 Governance and Compliance Framework

Roles & Structures:

- Chief AI Officer (CAIO): Overall accountability.

- Responsible AI Committee: Strategic reviews and escalations.

- Ethics & Risk Office: Enforcement and disciplinary actions.

Compliance Tools:

- ✅ AI Risk Assessment Form

- ✅ Responsible AI Scorecard (per project)

- ✅ Data Sensitivity Matrix

- ✅ Model Cards for every deployed system

📌 Summary: Why CAIOs Must Prioritize Responsible AI in 2025

Without trust, AI fails. Without ethical guardrails, AI becomes dangerous. This Responsible & Ethical AI Framework ensures your AI strategy is future-ready, legally compliant, and socially aligned. From bias-free models to energy-efficient deployments, each principle paves the way for human-centric AI adoption at scale.

🌟 Your role as CAIO is not just about scaling AI, but scaling it responsibly.

🎯 Adopt this framework. Share it across your teams. Embed it into your DevOps pipelines.

Download The CAIOz Responsible AI Checklist

📘 Download the Ultimate AI Governance Checklist

The Ten Commandments of Ethical and Responsible AI — your 2025 playbook for AI leadership, governance, and scale.

Trusted by CAIOs, Digital Architects, and Governance Leaders Worldwide.

🧭 Guide Note: Using This Responsible AI Checklist

Part of the CAIOz.com Leadership Charter | Version 2025

AI is no longer a pilot experiment. It’s now your enterprise’s co-pilot. But scale without ethics is chaos waiting to happen.

This checklist isn’t just a theoretical framework. It’s a hands-on governance tool curated for real-world AI deployment—built around 10 core principles every modern organization must operationalize to avoid reputational damage, legal exposure, or stakeholder distrust.

- ✅ Diagnostic questions for internal audits and governance reviews

- 🔍 Clarified interpretations of what each question truly means

- 📘 Sample responses to help your teams baseline and improve

At CAIOz.com, we believe Responsible AI isn’t a compliance checkbox—it’s a leadership signal. This checklist was built with that mindset.

Best used by:

- • CAIOs and AI Leads: As a governance compass to guide responsible scaling

- • ML Engineers and Architects: To bake ethics into design, not afterthoughts

- • Risk, Legal, and Audit Teams: For cross-functional transparency and control

- • Transformation Offices and Trainers: To build internal awareness and culture

We’ll continue to refine this with the CAIOz community. For the latest editions, use cases, and toolkits—visit www.caioz.com and stay ahead of the curve.

Because in a world where anyone can deploy AI, the real differentiator is who does it responsibly.

Also Read

- Banking 3.0 | Sentiment Analysis for Customer Feedback Solution

- Staffing 3.0 | Part I — Empathy Powered by Generative AI

- Telecom 6.0: How GenAI Is Rebooting the Powerful Telco Enterprise

- Pharma 5.0 | 20 Powerful Generative AI Ideas Transforming the Pharma Industry

- The Internet of Beings (IoB): 8 Powerful Use Cases of Industry 5.0 and Agentic AI

Very Insightful article. Thanks